At PrimeGroup, we strive to keep pace with the fast-moving world of Artificial Intelligence (AI). We leverage its many solutions to optimize our audiovisual and multimedia projects and, ultimately, to provide better services for our clients. AI is undoubtedly revolutionizing industries and enhancing people’s lives. Yet there are growing concerns about the ethical implications of this technology. Recently, the Italian Data Protection Authority temporarily blocked ChatGPT over concerns about the unlawful collection of personal data and the lack of safeguards against use by minors. More than 4,000 experts, including Steve Wozniak, Elon Musk and historian Yuval Harari, have also called for a pause of at least six months in the training of the most powerful AI systems, arguing that the technology is becoming so invasive in so many areas that it is getting out of control. It is therefore urgent to establish protocols that prevent AI from becoming a threat to our civilization. I’m Constantino de Miguel, CEO of PrimeGroup – thank you for your attention!

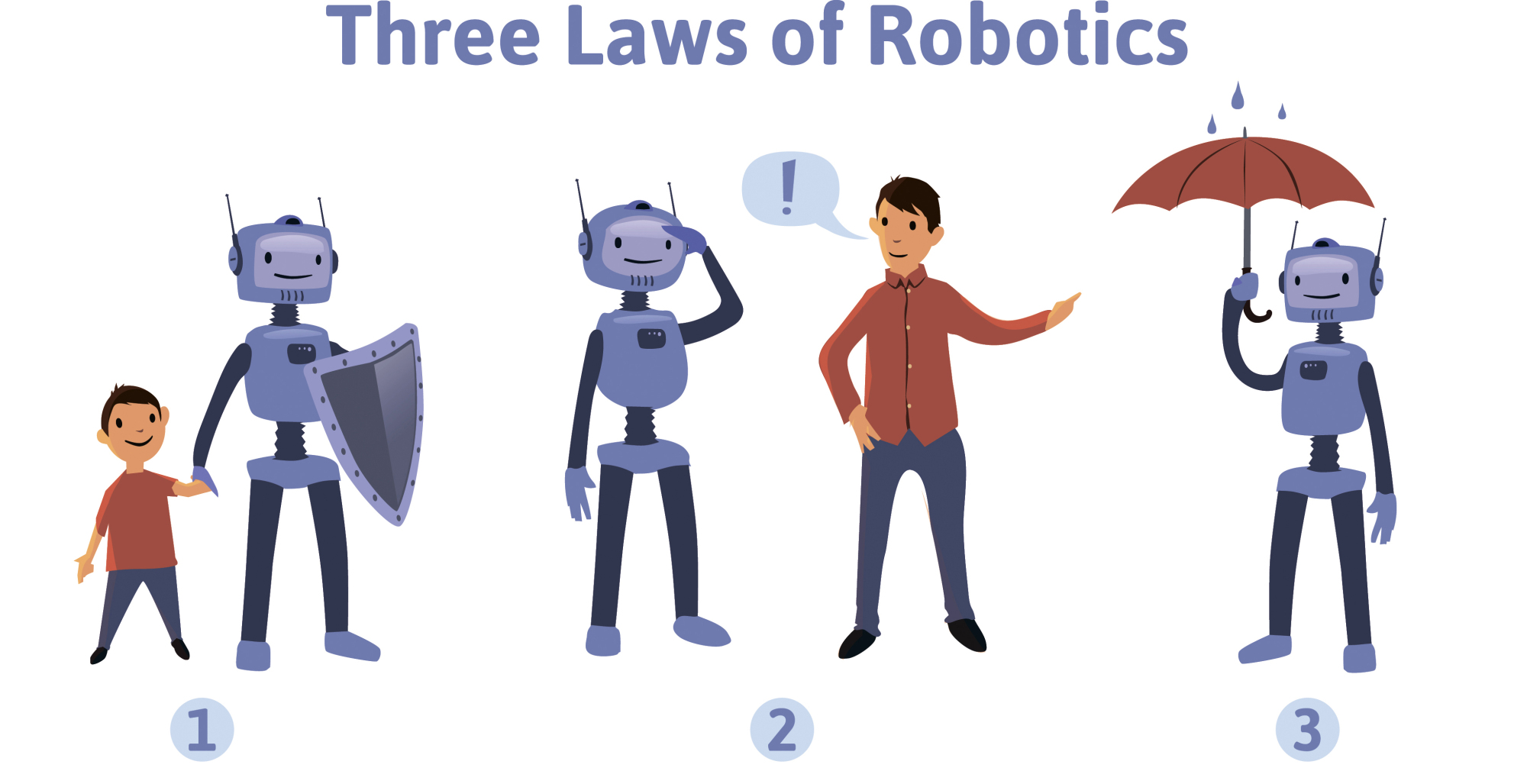

In his 1942 short story “Runaround”, Isaac Asimov outlined the “Three Laws of Robotics” as a way to harness the power of robots:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. A robot must obey the orders given to it by human beings, as long as such orders do not conflict with the First Law.
3. A robot must protect its own existence, as long as such protection does not conflict with the First or Second Law.

Inspired by these laws, several researchers and policymakers have advocated incorporating similar ethical principles into the design and use of AI systems.

After all, should we allow this prodigious technology to invade every communication channel, eroding critical thinking and exposing us to fake news and propaganda? Moreover, AI could replace us in many decision-making processes, and we could become obsolete. According to Goldman Sachs, automation driven by generative AI could enhance, but also replace, the equivalent of 300 million full-time jobs worldwide, while raising global GDP by 7% over the next decade. Companies, understandably, want to take advantage of AI’s potential to increase their profits. Banning AI is therefore not an option, or it is already too late to do so. The race now is to ensure this technology is used ethically and responsibly. Let’s look at the steps needed to make sure AI does good rather than harm.

As a first step, we need to establish ethical AI principles that can be used to ensure that AI systems are designed and used in accordance with values such as justice, equality, and freedom.

Secondly, we should ensure that AI systems are transparent and accountable: they must be easy to understand, open to scrutiny, and able to explain their decision-making processes. This builds trust in AI systems and allows them to be used responsibly.

As a third step, we must encourage diversity and inclusion in AI development: AI development teams should bring together as many perspectives from different cultures and languages as possible. This way, AI systems can be designed and tested from a broad range of viewpoints, helping to avoid unintentional biases.

Fourth, we must avoid perpetuating existing prejudices and misconceptions by developing algorithms that minimize bias and by ensuring that the data used to train AI systems is rich, diverse, and representative.

Fifth, as with Asimov’s laws of robotics, we should ensure that AI systems remain subject to human supervision and intervention when necessary. This helps prevent AI systems from making decisions that could harm humans or violate ethical principles.

As a final step, we should open the development and deployment of AI systems to public debate, rather than relying on experts or technocrats to make decisions for us.

By applying all six principles, we can ensure that the genie of AI not only remains under our control but is also used in a way that aligns with society’s values and priorities.